Segmentation of 36 car body parts with 94% accuracy by area

Detection of 11 types of glass and car body damage with 92% accuracy

Filters out 98% of heavily snow-covered and dirty vehicles


Leave a request on the site to get a one-month demo version!

CarDamageTest

APPLICATION AREAS

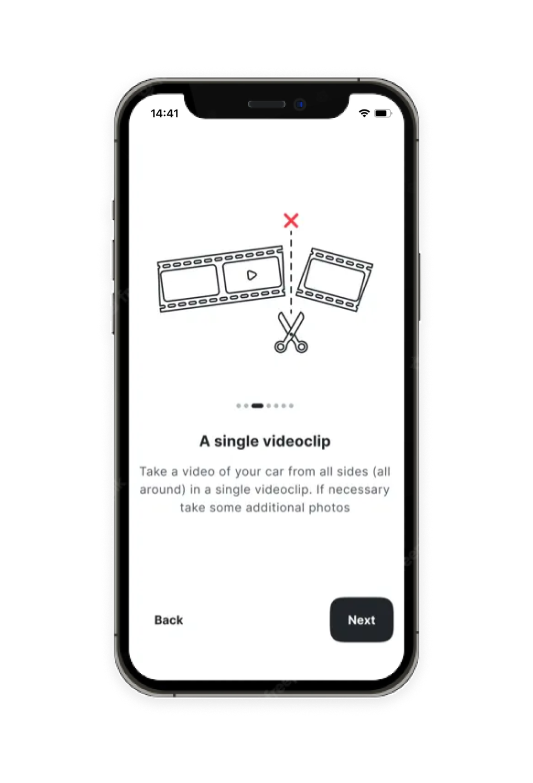

CarDamageTest is being developed to assess the technical condition of a car from video or photos. It provides the customer with a full report on the detected damage, indicating which part of the car body each instance of damage belongs to. The service is a mobile application: the customer films or photographs the car, uploads the data to the app, and within a couple of minutes receives complete information about the damage found.

Initially, the service was conceived as an intelligent system for remotely assessing a car's condition for insurance purposes: to obtain a policy, the owner must undergo a pre-insurance inspection, and after an accident the damage must be assessed to receive an insurance payout. However, CarDamageTest's existing functionality already allows it to be used by companies in many other fields, such as car sharing services, taxi services, and lenders offering car loans.

CarDamageTest works in three stages: preprocessing of the input photos and video, segmentation of the car body into parts, and damage search.

Preprocessing checks the completeness of the input data (whether a car, with all of its body parts, is present in the photo or video), assesses the darkness and illumination of the images, and verifies that the car is not covered by a large amount of snow or dirt. Preprocessing is implemented as a filtration funnel: at each step, the video frames or photos that do not meet the specified conditions are discarded, and only the remaining images are passed on to the next step. In the last preprocessing step, the shooting angle is determined for each image to verify that all required angles are present. The funnel is built from several types of deep neural networks and achieves a filtering accuracy of 98.5%. The full preprocessing cycle runs on the customer's side: it reports within seconds whether the uploaded data is valid, and photos and video are not uploaded to the server until they pass, which saves resources.
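The funnel described above can be sketched as a sequence of checks applied to the frames in order, followed by an angle-coverage check on whatever survives. This is a minimal illustration, not the service's implementation: the `Frame` fields, the thresholds, and `REQUIRED_ANGLES` are hypothetical stand-ins for the outputs of the neural networks, which the text does not specify.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Set, Tuple

# Hypothetical per-image record. In the real service these values would come
# from neural network predictions; the fields and scales here are assumptions.
@dataclass
class Frame:
    frame_id: int
    has_car: bool            # car detected in the image
    brightness: float        # mean brightness, assumed in [0, 1]
    snow_dirt_score: float   # 0 = clean, 1 = fully covered (assumed scale)
    angle: Optional[str]     # predicted shooting angle, e.g. "front"

Check = Tuple[str, Callable[[Frame], bool]]

# Hypothetical set of angles required for a complete inspection.
REQUIRED_ANGLES = {"front", "rear", "left", "right"}

# Hypothetical checks and thresholds, applied in order (the funnel steps).
CHECKS: List[Check] = [
    ("car present", lambda f: f.has_car),
    ("lighting ok", lambda f: 0.15 <= f.brightness <= 0.9),
    ("not snowed or dirty", lambda f: f.snow_dirt_score < 0.5),
]

def run_funnel(frames: List[Frame], checks: List[Check]) -> List[Frame]:
    """Apply each check in turn; only frames that pass move on to the next step."""
    remaining = list(frames)
    for _name, check in checks:
        remaining = [f for f in remaining if check(f)]
    return remaining

def validate(frames: List[Frame]) -> Tuple[List[Frame], Set[str]]:
    """Return the frames that passed the funnel and any required angles still missing."""
    passed = run_funnel(frames, CHECKS)
    covered = {f.angle for f in passed if f.angle is not None}
    return passed, REQUIRED_ANGLES - covered

example_frames = [
    Frame(1, True, 0.50, 0.1, "front"),
    Frame(2, True, 0.05, 0.1, "rear"),   # too dark
    Frame(3, False, 0.60, 0.0, None),    # no car in the frame
    Frame(4, True, 0.60, 0.8, "left"),   # heavily snowed
    Frame(5, True, 0.60, 0.2, "rear"),
    Frame(6, True, 0.60, 0.2, "left"),
]
```

Here `validate(example_frames)` keeps frames 1, 5 and 6 and reports `{"right"}` as missing, so the client could ask for another shot before anything is uploaded to the server.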

During the segmentation stage, the car body is "separated" into distinct parts so that the area where each damage was found can be determined. Today the service can identify 35 types of segments, from logos and antennas to the hood and various types of headlights and taillights. To solve this task, we developed an ensemble of neural network classifiers based on semantic segmentation, with additional pre- and post-processing. Car segmentation accuracy is currently 93% and does not drop even for rare models such as two-door sports cars, vintage limousines, and convertibles.
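One common way to combine several semantic segmentation networks is to average their per-pixel class probabilities and take the most likely class for each pixel. The sketch below assumes that scheme (the text does not say how the ensemble is combined or what the pre- and post-processing does) and adds intersection over union (IoU) as one possible area-based accuracy measure; both are illustrations, not the service's method.

```python
from typing import List, Optional

# prob_map[row][col][k] = probability that pixel (row, col) belongs to segment class k
ProbMap = List[List[List[float]]]
LabelMap = List[List[int]]

def ensemble_segment(prob_maps: List[ProbMap],
                     weights: Optional[List[float]] = None) -> LabelMap:
    """Average per-pixel class probabilities over the models, then take the argmax."""
    n = len(prob_maps)
    if weights is None:
        weights = [1.0 / n] * n
    height, width = len(prob_maps[0]), len(prob_maps[0][0])
    n_classes = len(prob_maps[0][0][0])
    labels: LabelMap = []
    for i in range(height):
        row = []
        for j in range(width):
            avg = [sum(w * pm[i][j][k] for w, pm in zip(weights, prob_maps))
                   for k in range(n_classes)]
            row.append(max(range(n_classes), key=avg.__getitem__))
        labels.append(row)
    return labels

def iou(pred: LabelMap, target: LabelMap, cls: int) -> float:
    """Intersection over union of the pixel areas labeled `cls` (1.0 if absent in both)."""
    inter = union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            inter += (p == cls) and (t == cls)
            union += (p == cls) or (t == cls)
    return inter / union if union else 1.0
```

With two models on a 1×2 image, where the first outputs `[0.9, 0.1]` and the second `[0.4, 0.6]` for the first pixel, the averaged vote picks class 0 even though the second model alone would pick class 1.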

A custom classifier has been implemented in the system to search for damage. It is a combination of three algorithms trained in two stages: first on separate subsets of damage classes, then on a sample containing images of all classes combined. Both modern neural network models and classic machine learning algorithms are used. The training sample combines real images with synthetic images generated by a neural network. We also developed and implemented our own augmentation, MosCut, based on the Albumentations library, which reduces class variability during training. The service detects 11 damage classes, from small paint chips and rust to missing parts. Damage detection accuracy is 92%, one of the highest rates in the world.
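The text does not say how the outputs of the three algorithms are combined. One common option is weighted soft voting over class probabilities; the sketch below assumes that, with hypothetical weights, hypothetical model outputs, and a shortened class list (only paint chips, rust, and missing parts are named in the text). It does not attempt to reproduce MosCut or the two-stage training.

```python
from typing import List, Sequence, Tuple

# Illustrative subset of the 11 damage classes; the full list is not given in the text.
DAMAGE_CLASSES = ["no_damage", "paint_chip", "rust", "missing_part"]

def soft_vote(probas: Sequence[Sequence[float]],
              weights: Sequence[float]) -> List[float]:
    """Weighted average of per-class probabilities from several models."""
    if len(probas) != len(weights):
        raise ValueError("need one weight per model")
    total = sum(weights)
    n_classes = len(probas[0])
    return [sum(w * p[k] for w, p in zip(weights, probas)) / total
            for k in range(n_classes)]

def classify(probas: Sequence[Sequence[float]],
             weights: Sequence[float]) -> Tuple[str, float]:
    """Return the winning damage class and its combined probability."""
    combined = soft_vote(probas, weights)
    best = max(range(len(combined)), key=combined.__getitem__)
    return DAMAGE_CLASSES[best], combined[best]

# Hypothetical outputs of two neural networks and one classic ML model for one region.
nn_a = [0.1, 0.6, 0.2, 0.1]
nn_b = [0.1, 0.3, 0.5, 0.1]
classic = [0.2, 0.5, 0.2, 0.1]
```

Calling `classify([nn_a, nn_b, classic], [0.4, 0.4, 0.2])` returns `"paint_chip"` with a combined probability of about 0.46; the second network alone would have chosen `"rust"`.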

Over the past few months, we have significantly expanded the service's functionality by adding the ability to determine the contours and depth of damage. Depth estimation is a unique solution that significantly improves the service overall.

The final stage of the service is carried out in two steps. In the first, the detected damage is matched against the car segments in each image, forming segment-damage pairs. One segment may contain several instances of damage, and one instance of damage may extend across several segments. In the second step, the damage data from all images is aggregated to form the overall decision.
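The two steps of the final stage can be sketched with damage and segment masks represented as sets of pixel coordinates. Assumptions: a damage instance is paired with every segment that covers at least a fraction `min_overlap` of its pixels (so one damage can span several segments and one segment can collect several damages), and aggregation keeps a segment-damage pair if it appears in at least `min_images` images. The rule and both thresholds are hypothetical; the text does not describe the actual decision logic.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Pixel = Tuple[int, int]
Pair = Tuple[str, str]  # (segment name, damage type)

def pair_damage_with_segments(segments: Dict[str, Set[Pixel]],
                              damages: List[Tuple[str, Set[Pixel]]],
                              min_overlap: float = 0.1) -> List[Pair]:
    """Step 1: pair each damage with every segment covering enough of its pixels."""
    pairs: List[Pair] = []
    for damage_type, d_pixels in damages:
        if not d_pixels:
            continue
        for seg_name, s_pixels in segments.items():
            overlap = len(d_pixels & s_pixels) / len(d_pixels)
            if overlap >= min_overlap:
                pairs.append((seg_name, damage_type))
    return pairs

def aggregate(per_image_pairs: List[List[Pair]],
              min_images: int = 1) -> Dict[str, Set[str]]:
    """Step 2: count in how many images each pair occurs and keep the frequent ones."""
    counts: Dict[Pair, int] = defaultdict(int)
    for pairs in per_image_pairs:
        for pair in set(pairs):  # count each pair at most once per image
            counts[pair] += 1
    report: Dict[str, Set[str]] = defaultdict(set)
    for (seg, dmg), n in counts.items():
        if n >= min_images:
            report[seg].add(dmg)
    return dict(report)
```

In a toy image where a paint chip straddles the hood and the front bumper and a rust spot sits only on the hood, step 1 yields three pairs; requiring `min_images=2` across two images would then keep only the pairs seen in both.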

Besides its high accuracy in detecting actual damage, a key advantage of our service is its robustness to sun glare, raindrops, snow, and dirt, which helps it avoid reporting non-existent damage and thus significantly improves the final result.

Upcoming CarDamageTest updates will expand the service's functionality to include predicting internal damage to the car, assessing the condition of the interior, and evaluating tire condition.