How we learned to remotely assess the physical conditions of automobiles

Finolab is a globally-oriented technology firm with a large-scale vision. We develop, implement, and maintain information systems that solve the financial and technological problems of both large- and medium-sized businesses. In this article, we describe how our team built a service that remotely assesses the physical condition of cars using AI-based technologies.

The first part of the article details the demands for and problems facing the remote assessment of car damage. The second portion discusses how we solved this task via the use of neural networks and classical machine learning, what obstacles we encountered, what results we achieved, as well as what still remains to be done.

Who needs online car insurance?

Every car owner runs into hurdles when signing up for liability or full-coverage car insurance. Both are time-consuming and costly: you have to file an application, wait for an appointment with an insurance agent, pay, and only then do you get a policy. In the event of an accident, receiving an insurance payout is an even longer and more headache-filled process: a report is filed, an accident assessor visits, the insurance company takes its time reaching a decision, and litigation over the amount of compensation may follow. Those who use car sharing services face issues of their own, such as companies finding so-called damage after a ride has ended and billing the customer for repairs. These examples shed light on the problems of vehicle assessment that both businesses and car owners want to see solved.

Why hasn't the task of automating the car damage inspection process been solved before now? The conditions for it have only recently come together: the functionality of smartphones has grown, the ability to work with big data sets has appeared, and large industries are faced with an urgent need to reduce costs by further automating processes.

We keep an eye out for innovations and communicate with a variety of businesses. So, we were among the very first on the global market to see a request for a new solution - an automatic assessment of a car's physical condition. Let's take a look at how we developed our service.

How we developed our service

Data preprocessing

The work to create the service began, as with any AI system, with an understanding of what we wanted the finished product to look like. Both insurance companies and existing car insurance regulations helped us a lot with this. Car damage is typically divided into 11 classes, ranging from minor paint chips to rust and missing parts. If the left and right sides are not distinguished, most car bodies consist of 24 different segments, including head and tail lights, bumpers, hood, roof, and the various windows and their supports. Having decided upon these basic parameters, we proceeded to train our model.

Types of detected damages

While the output data seemed quite simple to define, the same couldn't be said of high-quality input information, which required much more effort from us. We began by obtaining a data set of 250,000 images of cars, including both intact and damaged automobiles. Then we recruited a team of experts (taggers) to mark damage on the images and started training. Exactly a week later, we realized that one tagger could only process 12-15 images per shift, so we'd need years to mark the entire data set. Moreover, the taggers found it extremely difficult to mark car images "from scratch."

To speed up the tagging process and make things simpler for our experts, we proposed and implemented the following solution: after the first thousand images had been marked manually, a neural network was trained to produce a rough markup. Adjusting such a markup takes on average 36% less time than marking an image from scratch. Once we had a fully marked data set of several thousand images, we additionally trained a GAN (generative adversarial network), added filters built from neural network ensembles, and were able to generate synthetic marked-up images, the majority of which need minimal correction. From time to time, we checked the accuracy of the generated images and revised them as necessary. Even during the first stages, this approach allowed us to reduce marking time 4.2-fold, and the constant fine-tuning of both neural networks on the newly labeled data let us accelerate the tagging process more than 7-fold.

Our data set is currently a mixture of real and synthetic car images, which are equally valuable in the development of the service. This solution is suitable for both image segmentation and damage tagging.
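To make the scale of the labeling problem concrete, here is a rough back-of-the-envelope calculation. The data set size, the per-shift throughput, and the 4.2-fold and 7-fold speed-ups come from the text above. The figure of 250 shifts per tagger per year is our own assumption for illustration, and applying each speed-up uniformly to the whole data set is a simplification.

```python
import math

DATASET_SIZE = 250_000      # images in the data set (from the article)
IMAGES_PER_SHIFT = 12       # lower bound of the 12-15 range (from the article)
SHIFTS_PER_YEAR = 250       # assumption: one shift per working day


def shifts_needed(n_images, images_per_shift, speedup=1.0):
    """Tagger-shifts needed to label n_images at the given throughput."""
    return math.ceil(n_images / (images_per_shift * speedup))


def tagger_years(shifts, shifts_per_year=SHIFTS_PER_YEAR):
    """Convert tagger-shifts into years of work for a single tagger."""
    return shifts / shifts_per_year


manual = shifts_needed(DATASET_SIZE, IMAGES_PER_SHIFT)
assisted = shifts_needed(DATASET_SIZE, IMAGES_PER_SHIFT, speedup=4.2)
fine_tuned = shifts_needed(DATASET_SIZE, IMAGES_PER_SHIFT, speedup=7.0)

for name, shifts in [("manual", manual),
                     ("rough markup", assisted),
                     ("fine-tuned", fine_tuned)]:
    print(f"{name}: {shifts} shifts, "
          f"about {tagger_years(shifts):.1f} tagger-years")
```

Under these assumptions, fully manual marking comes to more than 80 tagger-years, which is why model-assisted markup was a necessity rather than an optimization.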

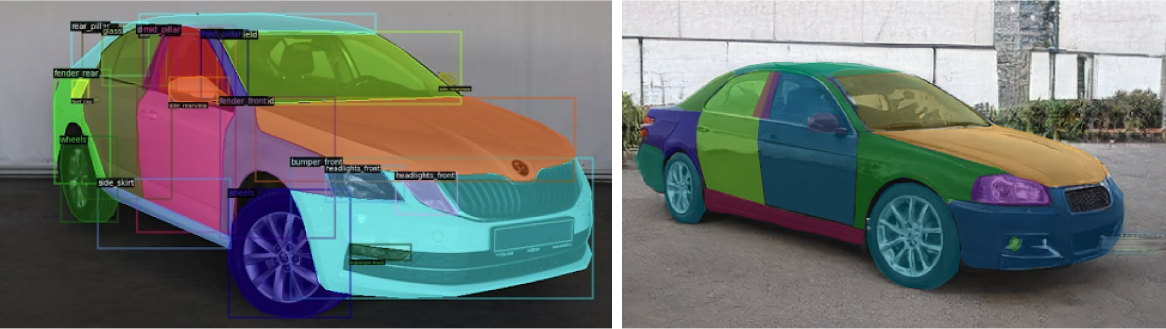

Manually segmented image vs. artificially segmented image

The next step was to preprocess the data received from the client. Insurance companies traditionally assess a car based on five photos, which include the head and tail lights, as well as the roof. Yet, after analyzing how easy such requirements are for clients to meet, the functionality of modern mobile apps, and clients' photography skills, we decided not to limit our customers to just photos. Instead, we decided to give them the opportunity to record an all-around video of a car.

Thus, when working with our service, a client can take a photo or record a 360-degree video (even showing just parts) of their car. In the event something is missing or of poor quality, we let them know and ask for additional photos/videos.

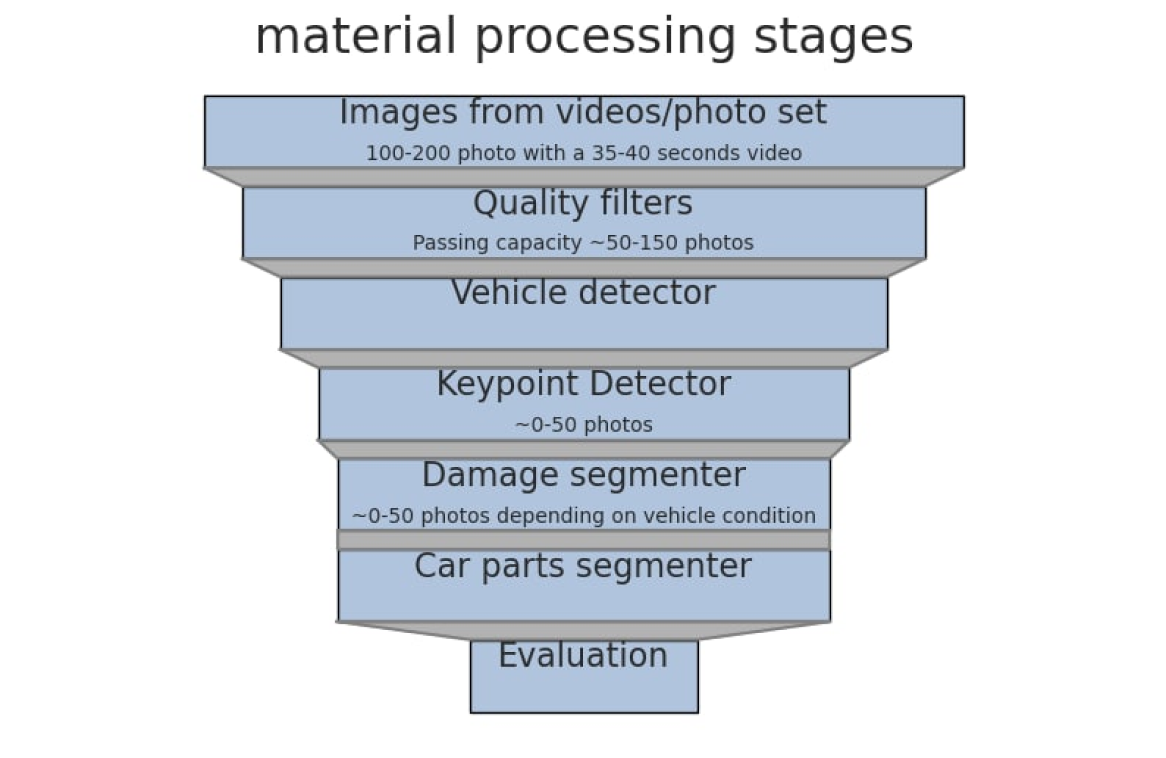

Aside from assessing the completeness of the input data (the presence of a car in a photo or video, as well as of the required body parts), our service's data preprocessing includes an assessment of the darkness and light exposure of images. We also filter out images where dirt and snow are present, in accordance with the requirements of insurance companies for pre-insurance surveys. For this purpose, we developed a filtration funnel model using several types of deep learning neural networks capable of high-quality filtration within an extremely short period of time.
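The filtration funnel itself is built on deep learning models and is not reproduced here. As a rough illustration of what a darkness, exposure, and blur check does, the sketch below swaps in simple classical heuristics: mean brightness for exposure and the variance of a Laplacian for sharpness. The function names and all thresholds are hypothetical and would need tuning on real data.

```python
import numpy as np


def laplacian_variance(gray):
    """Variance of a 4-neighbour Laplacian; low values suggest blur."""
    g = np.asarray(gray, dtype=np.float64)
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())


def quality_issues(gray, dark_thr=40.0, bright_thr=215.0, blur_thr=50.0):
    """Return a list of problems found in a grayscale image (0-255).

    All three thresholds are illustrative assumptions.
    """
    issues = []
    mean = float(np.mean(gray))
    if mean < dark_thr:
        issues.append("too dark")
    elif mean > bright_thr:
        issues.append("overexposed")
    if laplacian_variance(gray) < blur_thr:
        issues.append("blurred")
    return issues


# A flat, dark frame fails both checks; a high-contrast pattern passes.
dark_frame = np.full((64, 64), 10, dtype=np.uint8)
checker = np.where(np.indices((64, 64)).sum(axis=0) % 2 == 0, 200, 60)
sharp_frame = checker.astype(np.uint8)

print(quality_issues(dark_frame))
print(quality_issues(sharp_frame))
```

A check like this is cheap enough to run before any heavier model, which is the general idea behind a funnel: reject obviously unusable frames early and ask the user for replacements.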

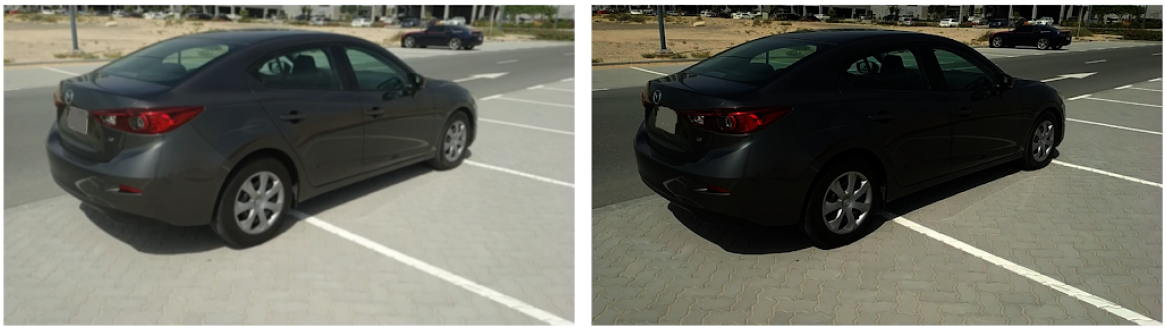

Blurred and dark images of cars rejected during the preprocessing stage

The preprocessing stage is completed by a model, developed by our specialists, that identifies the position of the car in space. It helps us not only to correctly determine the right and left sides of the vehicle, but also to locate the position of the person shooting the video relative to the car with an error of no more than 5.3 degrees. Implementing this stage made it possible for us to stop distinguishing between left and right front doors, left and right rear doors, and so on. We were able to replace them with side-agnostic classes, such as front door, rear door, and A-pillar. In this way, we lowered the number of segments from 36 to the previously mentioned 24, thereby improving the convergence of the algorithm.
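The class reduction can be pictured as a simple label mapping. The sketch below is illustrative only: the label names and the `left_`/`right_` prefix convention are hypothetical, and the list covers just a few segments rather than the full set. If every merge collapses one left/right pair into a single class, going from 36 to 24 classes corresponds to 12 mirrored pairs.

```python
def side_agnostic(label):
    """Drop a leading 'left_' or 'right_' from a segment label."""
    for prefix in ("left_", "right_"):
        if label.startswith(prefix):
            return label[len(prefix):]
    return label


# Hypothetical subset of side-specific segment labels.
SIDE_SPECIFIC = [
    "hood", "roof",
    "left_front_door", "right_front_door",
    "left_rear_door", "right_rear_door",
    "left_a_pillar", "right_a_pillar",
]

merged = sorted({side_agnostic(label) for label in SIDE_SPECIFIC})
print(len(SIDE_SPECIFIC), "->", len(merged), merged)
```

The side itself is not lost: once the position of the camera relative to the car is known, the left or right attribute can be restored after segmentation.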

Segmentation of car parts

To solve the problem of car body part segmentation, we tested several types of deep learning neural networks at different stages of work on the service, such as the two-stage Mask R-CNN and Faster R-CNN detectors and one-stage detectors of the YOLO family, with various metrics and parameter values. At first, however, we encountered a paradox: our service demonstrated better quality on five images than on video, where we had a hundred frames at our disposal. This contradiction can be put down to the psychology of motorists: as it turns out, car lovers have both "favorite" and "unloved" angles when shooting their cars.

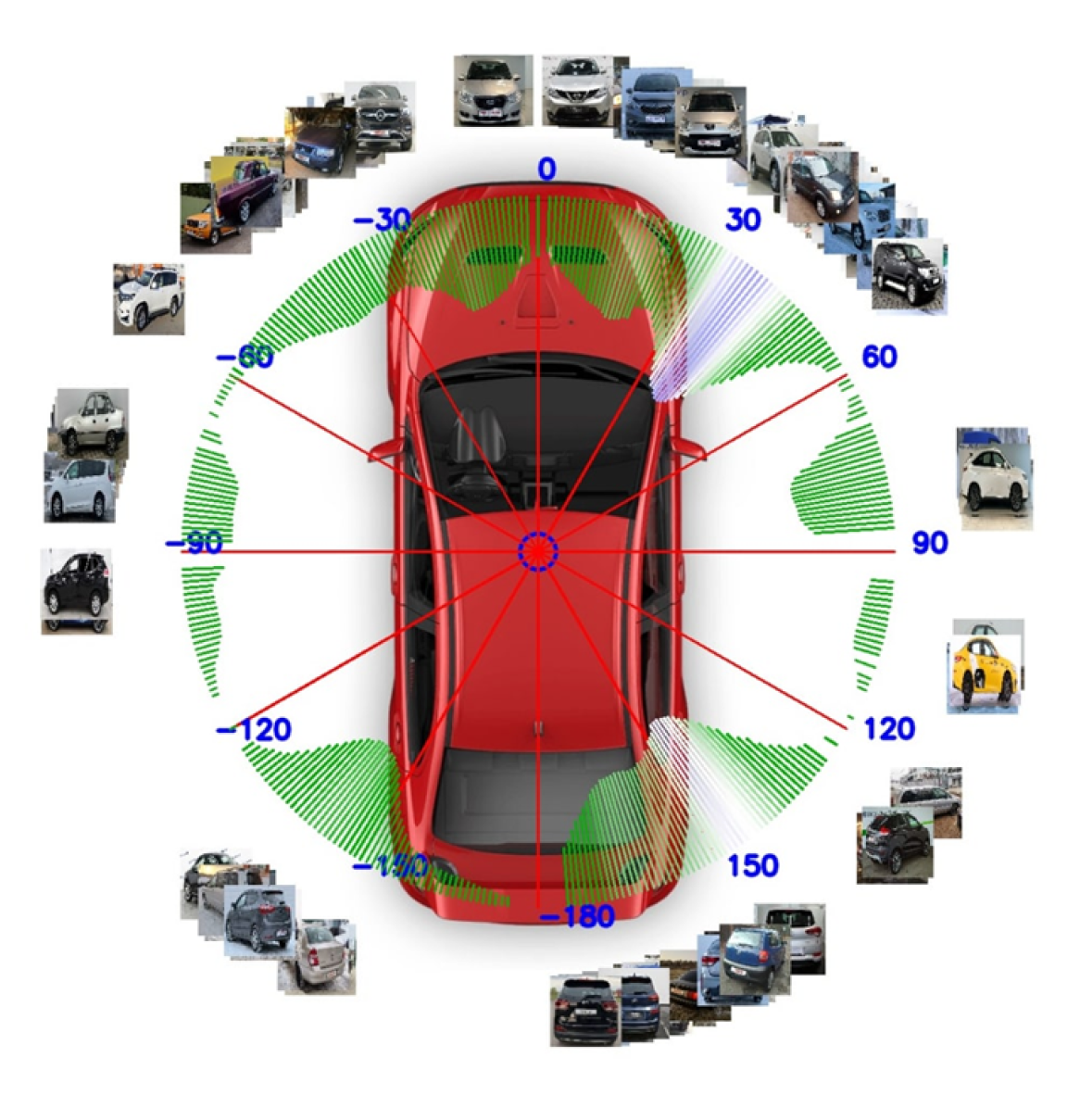

Distribution of the number of photos taken from different angles

As you can see, photos are usually taken from the passenger side at the head- and tail-light level.

By adding photos from "unloved" angles to the training data set and developing an ensemble of classifiers with additional pre- and post-processing, we achieved 90.5% accuracy for automated car segment detection.
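One simple way to spot "unloved" angles in a data set is to bin the shooting angles and flag the bins that hold far fewer photos than a uniform distribution would. The sketch below is a minimal illustration, not our production tooling. The function name, the number of bins, and the `min_share` threshold are assumptions.

```python
import numpy as np


def underrepresented_bins(azimuths_deg, n_bins=12, min_share=0.5):
    """Return start angles (degrees) of sparsely populated angle bins.

    A bin is flagged when it holds fewer than `min_share` times the
    number of photos expected under a uniform distribution.
    """
    angles = np.asarray(azimuths_deg, dtype=float) % 360.0
    counts, edges = np.histogram(angles, bins=n_bins, range=(0.0, 360.0))
    expected = len(angles) / n_bins
    return [float(edges[i]) for i, count in enumerate(counts)
            if count < min_share * expected]


# Toy example: plenty of shots from three quadrants, one from the fourth.
azimuths = [45] * 10 + [135] * 10 + [225] * 1 + [315] * 10
print(underrepresented_bins(azimuths, n_bins=4))
```

The flagged bins indicate which viewpoints need additional training photos.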

Car segmentation

Damage segmentation also came with both expected and unexpected challenges. On the one hand, even the most experienced specialists are unable to mark a car image for damage perfectly, because the human eye cannot always distinguish rust from dirt or, for instance, scratches from the shadows of surrounding objects. On the other hand, the variety of damage types, the fundamentally different classes of damage on glass and metal surfaces, as well as dirt, snow, raindrops, and all kinds of shadows, reflections, and sun flare significantly complicated an already difficult task.

Examples of false damages

Damage classification

At present, the service's damage classifier is an ensemble of three algorithms, trained both on subsets of damage classes and on the classes as a whole. To train the segmenter, we developed our own in-house augmentation called MosCut, based on the Albumentations library, which reduces class variability during training. The advantage of the solution is not only high-quality damage detection, but also a low percentage of false positives in cases where shadows, reflections, and dirt could be mistaken for damage. We will elaborate on this in upcoming publications.
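To illustrate how predictions from models trained on different subsets of classes might be combined, here is a minimal soft-voting sketch. It is not the ensemble used in the service: the averaging rule, the function name, and the class name "dent" are assumptions made for the example.

```python
from collections import defaultdict


def ensemble_vote(predictions):
    """Combine per-model class probabilities by averaging.

    predictions: list of dicts (class name -> probability), one per model.
    A model that was not trained on a class simply omits it, and each
    class score is averaged over the models that cover that class.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for model_output in predictions:
        for cls, prob in model_output.items():
            totals[cls] += prob
            counts[cls] += 1
    scores = {cls: totals[cls] / counts[cls] for cls in totals}
    best = max(scores, key=scores.get)
    return best, scores


# Three hypothetical models with partly overlapping class coverage.
model_outputs = [
    {"scratch": 0.6, "dent": 0.3, "rust": 0.1},   # all three classes
    {"scratch": 0.7, "dent": 0.3},                # subset without rust
    {"rust": 0.2, "dent": 0.8},                   # subset without scratch
]
best_class, class_scores = ensemble_vote(model_outputs)
print(best_class, class_scores)
```

Averaging over only the models that know a class keeps a specialist model from being penalized for classes it never saw. A real ensemble would typically also calibrate the models against each other.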

Scratch detection without detection of false reflections from marking lines and glare

The final processing of the results consists of matching the damage found with the car segments in each image and aggregating the damage data obtained from all of the images.
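The matching and aggregation step can be sketched as follows, assuming each image yields a segment map (one integer id per pixel) and a binary mask per detected damage. The function names, the majority-overlap rule, and the vote counting are assumptions for illustration, not a description of the production logic.

```python
from collections import Counter

import numpy as np


def segment_for_damage(damage_mask, segment_map, background=0):
    """Return the id of the segment that overlaps the damage the most."""
    hit = segment_map[np.asarray(damage_mask, dtype=bool)]
    hit = hit[hit != background]
    if hit.size == 0:
        return None  # the damage does not lie on any known segment
    ids, counts = np.unique(hit, return_counts=True)
    return int(ids[np.argmax(counts)])


def aggregate(findings_per_image):
    """Count in how many images each (segment, damage class) pair appears."""
    votes = Counter()
    for findings in findings_per_image:
        votes.update(set(findings))
    return votes


# Toy 4x6 image: segment 1 on the left half, segment 2 on the right half.
segment_map = np.zeros((4, 6), dtype=int)
segment_map[:, :3] = 1
segment_map[:, 3:] = 2

damage = np.zeros((4, 6), dtype=bool)
damage[1, 2:6] = True  # one pixel on segment 1, three on segment 2

print(segment_for_damage(damage, segment_map))
print(aggregate([{(2, "scratch")}, {(2, "scratch"), (1, "rust")}]))
```

Counting how many images confirm each finding gives a natural way to suppress one-off false positives when the same part is visible in several frames.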

In general, the process of our service (from uploading photos and video images to generating solutions) goes through the following stages:

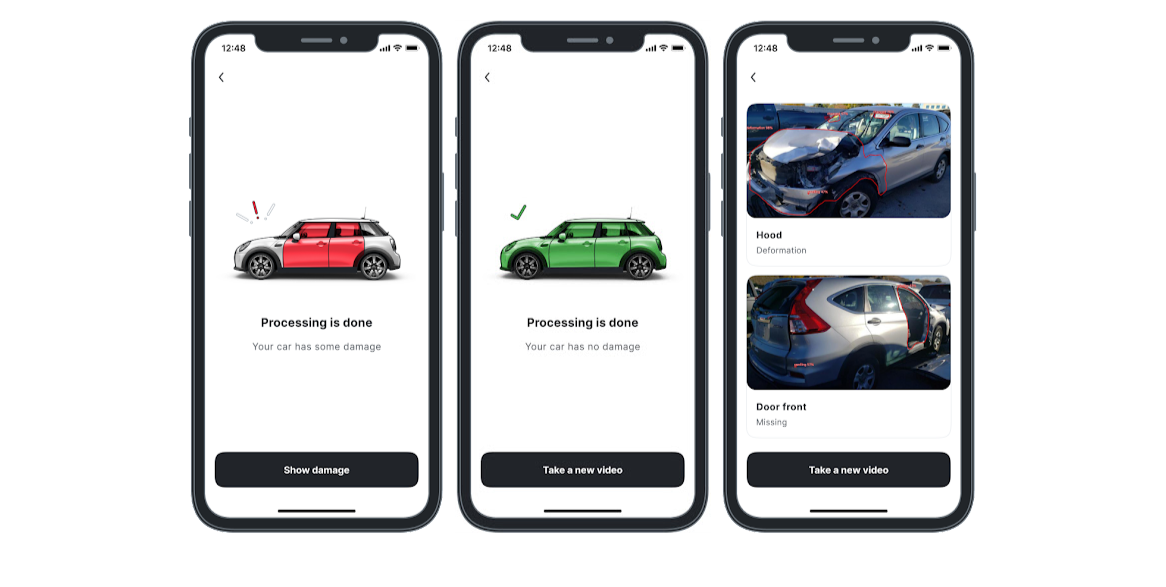

At the end of processing, our service displays a message saying either that no damage was detected or that damage was detected, indicating the segments where it was found. Based upon the results, a textual description of the car assessment is created that meets the requirements of insurance companies, while the user receives an additional visual representation of the damage detected.

Service results

What's next?

We currently offer our product as an app for mobile devices and as an API that can be integrated into a customer's IT system. The product has a competitive edge on the global market. It only takes a few minutes to process a car assessment request. The accuracy of damage classification by our service has reached 87%, which is one of the highest results in the world today among remote car damage assessment systems.

But we're not resting on our laurels. We are now developing a host of innovative solutions to expand our service: we're finishing up testing on a repair cost calculation module, working on the possibility of assessing the physical condition of taxis and corporate transport, as well as adding new components for use in car sharing.

Our plans include creating a full-fledged digital auto insurance ecosystem to offer drivers a convenient and simple service, increase business transparency, and lower operating costs for insurance companies across the globe.